Rendering, Shaders, GPU Compute, & Optimization

Game-Ready 2D Fluid Simulation (2025):

These videos feature a GPU-accelerated 2D fluid simulation written from scratch in my C++ game engine. It works by simulating a velocity field and mass transfer through a grid of "pseudo-particles." Each particle has a cell-relative position, velocity, mass, and pressure. Viscosity is achieved via attraction/repulsion forces. The simulation runs on the GPU and is split into chunks, which helps mitigate the performance penalty of GPU-to-CPU read-backs: CPU-side game state updates can be performed per chunk, only where needed.

Audio is generated through a sparse grid of "springy probes" that accumulate momentum from the fluid velocity field. The probes allow efficient detection of fluid conditions such as flow intensity and vorticity, which are used to spawn appropriate sound emitters with varying gain curves.

Networking is achieved by local simulation with a server-authoritative “divergence heuristic” rollback system for high-latency clients.
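The springy-probe idea for audio can be illustrated with a small sketch. It assumes each probe is a unit-mass damped spring anchored at a grid point, dragged toward the local fluid velocity, with its total mechanical energy used as a flow-intensity proxy; vorticity is computed as the z-component of the curl using central differences on a row-major velocity grid. The names (`SpringyProbe`, `vorticity`) and the stiffness, damping, and coupling constants are hypothetical, not taken from the engine.

```cpp
#include <cassert>

// 2D vector used throughout this sketch.
struct Vec2 {
    float x = 0.0f;
    float y = 0.0f;
};

// Hypothetical "springy probe": a unit-mass damped spring anchored at a fixed
// grid point. The local fluid velocity drags the probe along while the spring
// pulls it back toward its anchor, so the probe accumulates momentum from the
// flow. All constants are illustrative assumptions.
struct SpringyProbe {
    float stiffness = 40.0f; // spring constant pulling back to the anchor
    float damping = 4.0f;    // internal damping of the probe's own motion
    float coupling = 8.0f;   // how strongly the fluid drags the probe
    Vec2 offset;             // displacement from the anchor
    Vec2 velocity;           // probe velocity (unit mass, so also momentum)

    void step(Vec2 fluidVel, float dt) {
        // Drag toward the fluid velocity, spring restoring force, and damping.
        float ax = coupling * (fluidVel.x - velocity.x) - stiffness * offset.x - damping * velocity.x;
        float ay = coupling * (fluidVel.y - velocity.y) - stiffness * offset.y - damping * velocity.y;
        // Semi-implicit Euler: update velocity first, then position.
        velocity.x += ax * dt;
        velocity.y += ay * dt;
        offset.x += velocity.x * dt;
        offset.y += velocity.y * dt;
    }

    // Kinetic + spring potential energy as a flow-intensity proxy: steady flow
    // holds the probe displaced, turbulent flow makes it oscillate.
    float intensity() const {
        float kinetic = 0.5f * (velocity.x * velocity.x + velocity.y * velocity.y);
        float potential = 0.5f * stiffness * (offset.x * offset.x + offset.y * offset.y);
        return kinetic + potential;
    }
};

// Vorticity (dv/dx - du/dy) at interior cell (x, y) of a row-major velocity
// grid, using central differences.
float vorticity(const Vec2* field, int width, int height, int x, int y, float cellSize) {
    assert(x > 0 && x < width - 1 && y > 0 && y < height - 1);
    const Vec2& right = field[y * width + (x + 1)];
    const Vec2& left = field[y * width + (x - 1)];
    const Vec2& up = field[(y + 1) * width + x];
    const Vec2& down = field[(y - 1) * width + x];
    return ((right.y - left.y) - (up.x - down.x)) / (2.0f * cellSize);
}
```

In a steady, uniform flow the probe settles at a constant displacement (`coupling * flow / stiffness`), while fluctuating flow keeps it moving; thresholds on `intensity()` and `vorticity` could then drive emitter spawning and gain curves.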

Earth Renderer (Manta Engine) (2025):

Voxel Renderer Prototype (2024):

Wrote a quick voxel rendering prototype within my C++ game engine (Manta) as a stress test for the engine’s rendering abstraction layer/API. The demo features chunk LOD swapping, frustum culling, dynamic per-vertex shadow mapping, fog, and subtle world curvature. The highest-quality LOD scheme attempts to swap LODs once voxels project to 0.5 pixels or smaller on screen. All in all, it performs well on my system (~1000 fps) with a scene of ~2 billion blocks at 8 bytes/vertex. There is still plenty of room for optimization, since I'm not yet sorting draw calls or batching distant LODs with an octree.
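The 0.5-pixel swap rule can be made concrete with the usual perspective estimate: a feature of world-space size s at distance d, seen by a camera with vertical field of view θ on a viewport H pixels tall, covers roughly s · H / (2 d tan(θ/2)) pixels. The sketch below assumes each LOD level doubles the voxel size and steps to a coarser level while the current level's voxels project to 0.5 px or less; it also shows one possible 8-byte vertex layout. The function names, the doubling scheme, and the bit layout are illustrative assumptions, not Manta's actual code.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Approximate on-screen size, in pixels, of a feature of world-space size
// `worldSize` seen at `distance` by a perspective camera with vertical field of
// view `fovY` (radians) and a viewport `viewportHeightPx` pixels tall.
float projectedPixels(float worldSize, float distance, float fovY, float viewportHeightPx) {
    assert(distance > 0.0f);
    return worldSize * viewportHeightPx / (2.0f * distance * std::tan(fovY * 0.5f));
}

// Hypothetical LOD rule: level n uses voxels of size baseVoxelSize * 2^n.
// Starting from the finest level, step to the next coarser level while the
// current level's voxels project to `thresholdPx` pixels or less.
int selectLod(float baseVoxelSize, float distance, float fovY,
              float viewportHeightPx, int maxLod, float thresholdPx = 0.5f) {
    int lod = 0;
    float voxelSize = baseVoxelSize;
    while (lod < maxLod &&
           projectedPixels(voxelSize, distance, fovY, viewportHeightPx) <= thresholdPx) {
        ++lod;
        voxelSize *= 2.0f;
    }
    return lod;
}

// One possible 8-byte vertex layout (an assumption, not the engine's format):
// chunk-local position packed as 3 x 10 bits, plus face, AO, and material bits.
struct PackedVoxelVertex {
    std::uint32_t position; // x | y << 10 | z << 20, each in [0, 1023]
    std::uint32_t attribs;  // face (3 bits) | ao << 3 (2 bits) | material << 5 (16 bits)
};
static_assert(sizeof(PackedVoxelVertex) == 8, "expected 8 bytes per vertex");

PackedVoxelVertex packVertex(std::uint32_t x, std::uint32_t y, std::uint32_t z,
                             std::uint32_t face, std::uint32_t ao, std::uint32_t material) {
    assert(x < 1024 && y < 1024 && z < 1024);
    assert(face < 6 && ao < 4 && material < 65536);
    return PackedVoxelVertex{x | (y << 10) | (z << 20), face | (ao << 3) | (material << 5)};
}
```

For example, with a 90° vertical FOV, a 1080-pixel-tall viewport, and 1-unit voxels at a distance of 3000 units, `selectLod` returns 2: level 0 and level 1 voxels project to about 0.18 px and 0.36 px, while level 2 voxels project to about 0.72 px.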

Raven Software - Call of Duty (2021 - 2024)

As an engineer on the Rendering team, I worked on a number of features, including:

Expanded the engine’s large-scale terrain system & associated tooling to give artists more freedom when painting layered textures/materials. See this SIGGRAPH 2023 presentation on Call of Duty’s terrain system for more context.

Wrote new shaders for bullet particles to create the illusion of volume and energy conservation.

Implemented low-level engine support for new categories of purchasable in-game content with careful consideration for data, streaming, and rendering constraints.

Made improvements to the runtime texture and mesh streaming system for Warzone’s Caldera map. Caldera introduced airplanes with the fastest player-controlled speeds seen in Call of Duty, necessitating optimizations to the streaming heuristics to reduce churn on low-end hardware and consoles.

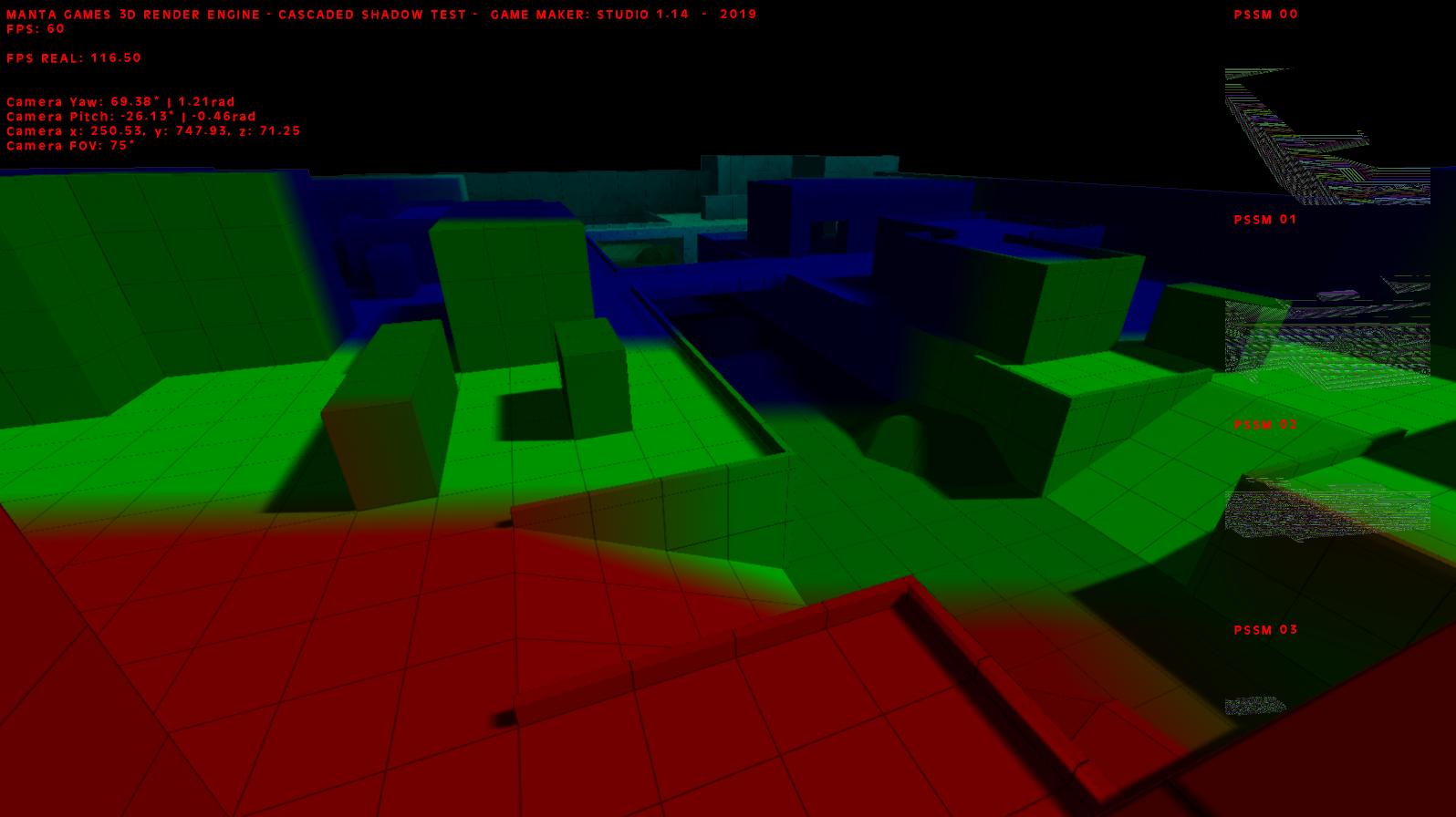

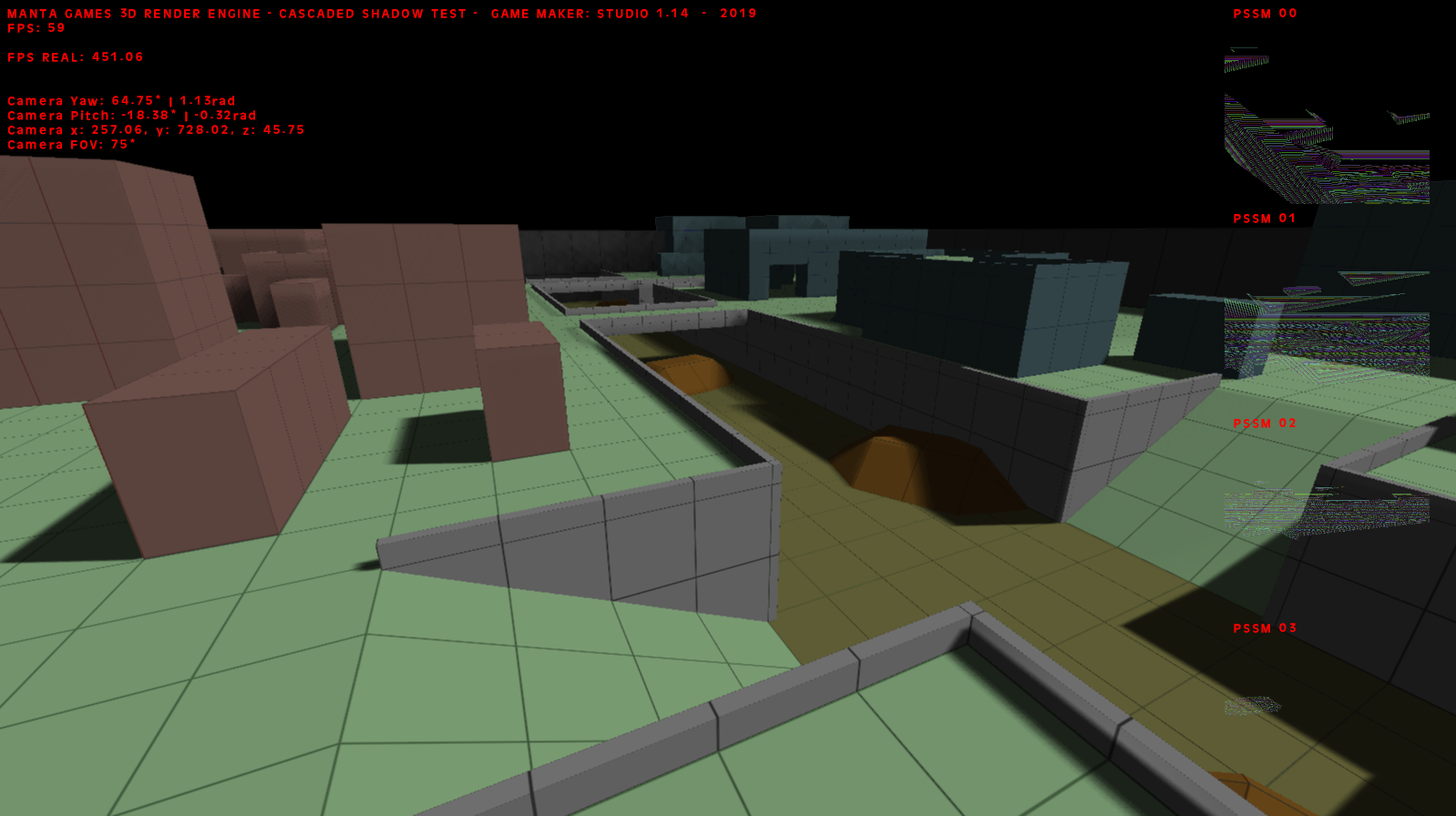

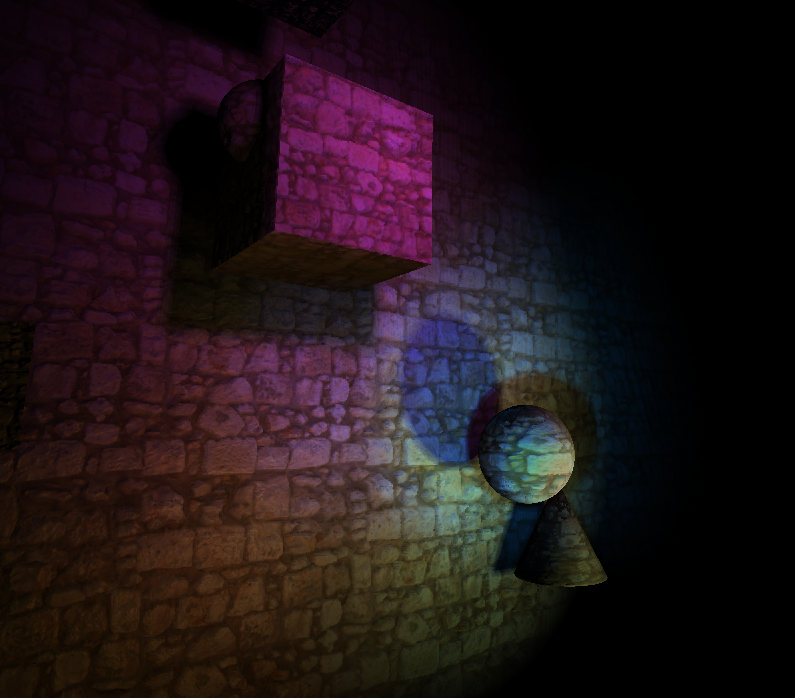

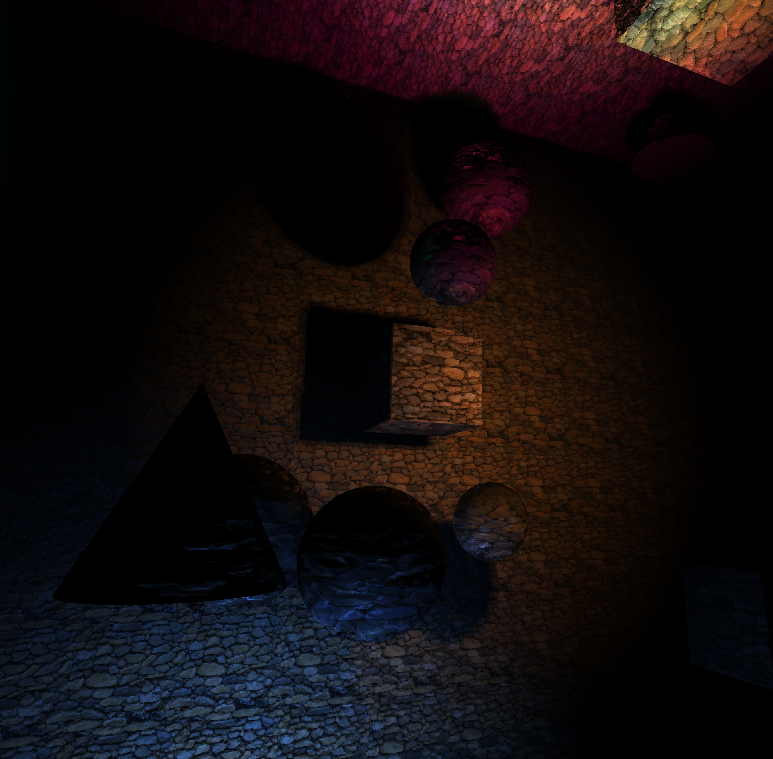

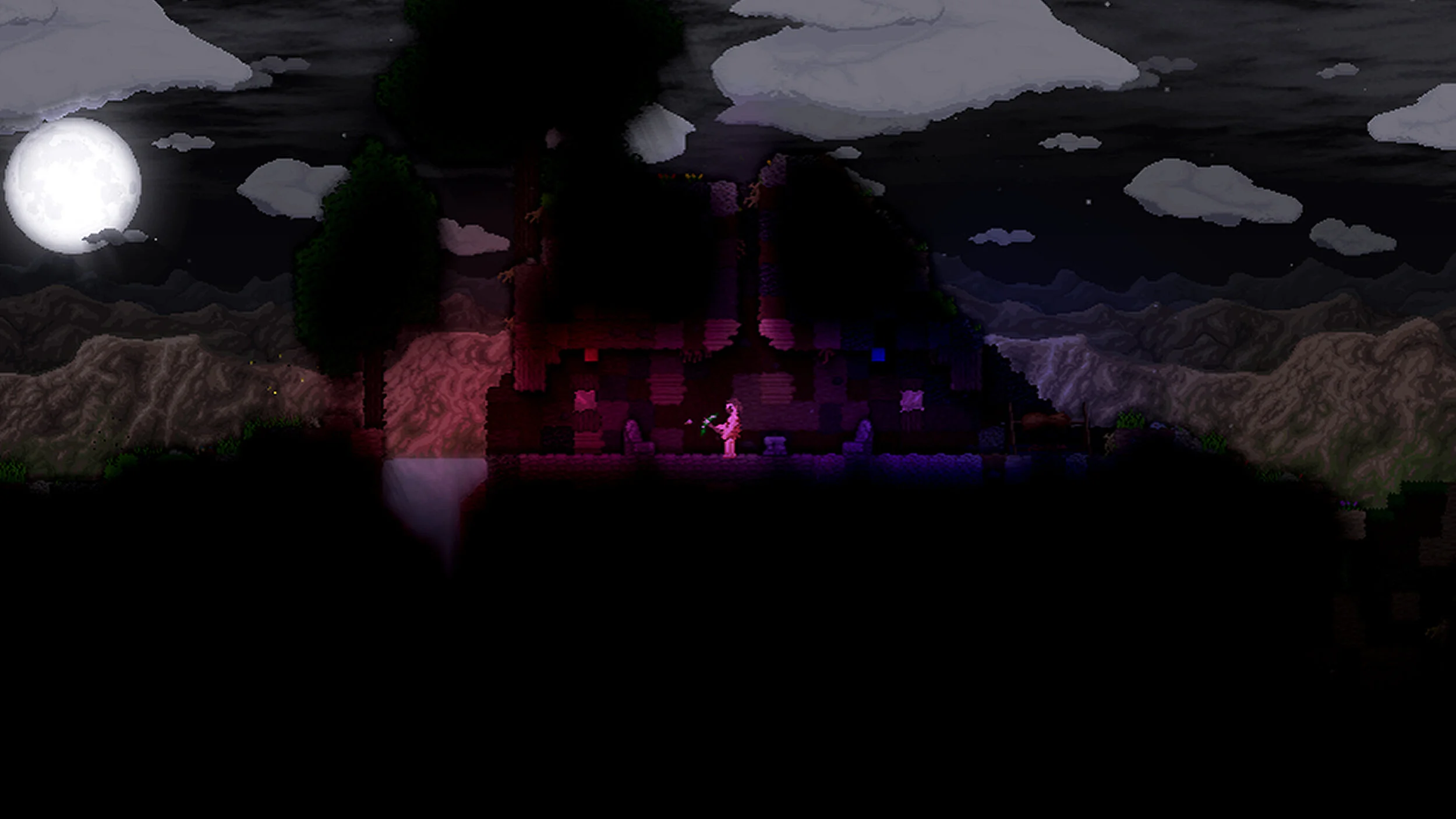

Deferred Renderer (2018 - 2019):

The videos above showcase a custom 3D deferred rendering pipeline that I developed for GameMaker: Studio 1.4 while studying at university. It was created to expand the engine’s limited 3D capabilities.

The renderer supported cascaded shadow mapping, parallax occlusion mapping (with self-shadowing), screen-space ambient occlusion, and point and spot lighting.
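Cascaded shadow mapping needs a rule for where each cascade's depth range begins and ends. The split rule this renderer used isn't described, so the sketch below uses a widely used option, the "practical split scheme," which blends logarithmic and uniform splits with a weight `lambda`. The function name and parameters are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Cascade split distances from a blend of logarithmic and uniform splits.
// lambda = 1 gives purely logarithmic splits, lambda = 0 purely uniform ones.
// Returns cascadeCount + 1 view-space depths, from nearZ to farZ.
std::vector<float> cascadeSplits(float nearZ, float farZ, int cascadeCount, float lambda) {
    assert(nearZ > 0.0f && farZ > nearZ && cascadeCount > 0);
    assert(lambda >= 0.0f && lambda <= 1.0f);
    std::vector<float> splits(cascadeCount + 1);
    for (int i = 0; i <= cascadeCount; ++i) {
        float t = static_cast<float>(i) / cascadeCount;
        float logSplit = nearZ * std::pow(farZ / nearZ, t);  // even resolution in log space
        float uniformSplit = nearZ + (farZ - nearZ) * t;      // even spacing in depth
        splits[i] = lambda * logSplit + (1.0f - lambda) * uniformSplit;
    }
    return splits;
}
```

Each pixel then samples the first cascade whose far split exceeds its view-space depth. Higher `lambda` values concentrate shadow-map resolution near the camera, where perspective aliasing is most visible.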

Virtual Reality Experiments (2014, 2021)

The videos above showcase a couple of VR applications that I’ve created using the Unity game engine and the Oculus Rift SDK. VR Scuba Simulator was made as a final project for my CS 579 (Virtual Reality) course at UW-Madison in 2021.

Gallery: